Interactive work

The login nodes of Grex are shared resources and should be used for basic operations such as (but not limited to):

- edit files

- compile code and run short interactive calculations

- configure and build programs (limit parallel builds to 4 jobs: make -j4)

- submit and monitor jobs

- transfer and/or download data

- run short tests.

In other words, anything that is neither CPU- nor memory-intensive (for example, a test using up to 4 CPUs and less than 2 GB per core, for 30 minutes or less).
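As a minimal sketch, assuming a bash shell on the login node, the limits above can be respected for a threaded test or a parallel build by capping the thread count at 4 (or fewer, if the node reports fewer cores). OMP_NUM_THREADS only affects OpenMP programs; other codes may have their own thread settings.

```shell
#!/usr/bin/env bash
# Cap parallelism on a shared login node at 4 threads, or fewer if
# nproc reports fewer available cores.
max_threads=4
avail=$(nproc)
threads=$(( avail < max_threads ? avail : max_threads ))

# OpenMP programs read this variable to decide how many threads to start.
export OMP_NUM_THREADS="$threads"
echo "Using ${OMP_NUM_THREADS} threads"

# For a build, pass the same cap to make (uncomment inside a source tree):
# make -j"$threads"
```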

It is very easy to cause resource congestion on a shared Linux server. Therefore, all production calculations should be submitted in batch mode, using our resource management system, SLURM. It is also possible to submit so-called interactive jobs: jobs that create an interactive session but actually run on dedicated CPUs (and GPUs, if needed) on the compute nodes rather than on the login nodes (head nodes). Note that the login nodes do not have GPUs.

Running interactive computations this way ensures that the login nodes are not congested. The drawback is that when the cluster is busy, an interactive job has to wait in the queue before it starts, just like any other job. In practice, interactive jobs should therefore be short and small, so that the scheduler can fit them onto backfill-able nodes. This section covers how to run such jobs with SLURM.

Note that connecting manually via SSH to compute nodes on which you do not have an active running job is forbidden on Grex.
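To find out which compute nodes you may connect to, you can list your running jobs and the nodes assigned to them with squeue. This is a sketch assuming the standard SLURM client tools on the login node; the format codes %i (job ID) and %N (node list) are standard squeue options, and the guard only avoids an error where SLURM is not installed. The node name in the final comment is a placeholder.

```shell
#!/usr/bin/env bash
# Show your RUNNING jobs and the nodes they occupy.
# %i = job ID, %N = list of allocated nodes.
if command -v squeue >/dev/null 2>&1; then
    squeue -u "${USER:-$(id -un)}" -t RUNNING -o "%.12i %N"
    slurm_found=yes
else
    echo "squeue not found: run this on a Grex login node" >&2
    slurm_found=no
fi
# Only nodes listed above may be reached with SSH, e.g.:
# ssh <node-name>    (placeholder for a node from the list)
```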

Interactive batch jobs

To request an interactive job, use the salloc command. Interactive jobs are not limited to a single node; any nodes/tasks/GPUs layout can be requested with salloc in the same way as with sbatch directives. However, to minimize queuing time, request a minimal set of resources for interactive jobs (for example, less than 3 hours of wall time and less than 4 GB of memory per core). Because interactive jobs have no batch script, all resource requests must be given as command-line options to salloc. The same logic of --nodes=, --ntasks-per-node=, --mem= and --cpus-per-task= as for batch jobs applies here as well.
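The difference between --mem= and --mem-per-cpu= is easy to get wrong: in SLURM, --mem= is the memory per node, while --mem-per-cpu= is multiplied by the number of allocated CPUs. The arithmetic below uses example numbers chosen for this sketch to show two equivalent ways of asking for 2000 MB per core for a single 6-core task on one node.

```shell
#!/usr/bin/env bash
# Example request: 1 task with 6 cores on a single node.
ntasks=1
cpus_per_task=6

# Option A: --mem-per-cpu=2000M -> total = tasks * cores per task * 2000 MB
mem_per_cpu_mb=2000
total_a=$(( ntasks * cpus_per_task * mem_per_cpu_mb ))

# Option B: --mem=12000M is already a per-node total
mem_per_node_mb=12000
per_core_b=$(( mem_per_node_mb / (ntasks * cpus_per_task) ))

echo "--mem-per-cpu=${mem_per_cpu_mb}M -> ${total_a} MB on the node"
echo "--mem=${mem_per_node_mb}M -> ${per_core_b} MB per core"
```

For a multi-node MPI job, --mem-per-cpu= is usually the simpler choice, because the per-node total given by --mem= depends on how many tasks end up on each node.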

For a threaded SMP code asking for 6 cores for two hours:

```shell
salloc --nodes=1 --ntasks=1 --cpus-per-task=6 --mem=12000M --partition=skylake --time=0-2:00:00
```

For an MPI job that needs 48 tasks, irrespective of the node layout:

```shell
salloc --ntasks=48 --mem-per-cpu=2000M --partition=skylake --time=0-2:00:00
```

As with batch jobs, specifying a partition with --partition= is required. For more information, see the page .
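Once salloc grants the allocation, you get an interactive shell for the duration of the job. The sketch below assumes a bash shell inside such a session; SLURM_CPUS_PER_TASK, SLURM_JOB_ID and SLURM_JOB_NODELIST are standard SLURM environment variables, and ./my_program is a hypothetical executable. It matches the OpenMP thread count to the cores actually allocated and falls back to 1 outside a job.

```shell
#!/usr/bin/env bash
# Inside an interactive session: use as many threads as cores were allocated.
# SLURM sets SLURM_CPUS_PER_TASK only when --cpus-per-task was requested.
export OMP_NUM_THREADS="${SLURM_CPUS_PER_TASK:-1}"

echo "Job:     ${SLURM_JOB_ID:-none (not inside a SLURM job)}"
echo "Nodes:   ${SLURM_JOB_NODELIST:-none}"
echo "Threads: ${OMP_NUM_THREADS}"

# Launch work with srun so it runs on the allocated resources
# (./my_program is a hypothetical executable name):
# srun ./my_program
# When finished, type 'exit' to end the session and release the allocation.
```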

Interactive GPU jobs

The difference for GPU jobs is that they must be directed to nodes with GPU hardware, which means you should always specify --partition=gpu or --partition=stamps-b.

SLURM on Grex uses the so-called “GTRES” plugin for scheduling GPU jobs, which means that a request in the form of --gpus=N, --gpus-per-node=N or --gpus-per-task=N is required. Note that both partitions have up to four GPUs per node, so a request for more than 4 GPUs per node, or per task, cannot be satisfied. For interactive jobs, a single GPU is sufficient in most cases.
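After a GPU session starts, it is worth confirming which devices the job actually received before launching a long computation. A sketch, assuming the NVIDIA driver utilities are available on the GPU node: nvidia-smi -L lists the visible GPUs, and CUDA_VISIBLE_DEVICES is typically set by SLURM to the GPUs assigned to the job.

```shell
#!/usr/bin/env bash
# Inside a GPU session: show which GPUs this job can see.
gpus="${CUDA_VISIBLE_DEVICES:-none}"
echo "CUDA_VISIBLE_DEVICES=${gpus}"

if command -v nvidia-smi >/dev/null 2>&1; then
    # One line per visible GPU, including its model name and UUID.
    nvidia-smi -L
else
    echo "nvidia-smi not found: this is probably not a GPU node" >&2
fi
```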

For an interactive session using one 16 GB V100 GPU, 4 CPUs and 4000 MB per CPU for two hours:

```shell
salloc --gpus=1 --cpus-per-task=4 --mem-per-cpu=4000M --time=0-2:00:00 --partition=stamps-b
```

Similarly, for a 32 GB V100 GPU:

```shell
salloc --gpus=1 --cpus-per-task=4 --mem-per-cpu=4000M --time=0-2:00:00 --partition=gpu
```

Graphical jobs

What if your interactive job involves a GUI-based program? You can SSH to a login node with X11 forwarding enabled and run it there. It is also possible to forward the X11 connection to the compute nodes where your interactive job runs, using the --x11 flag of salloc:

```shell
salloc --ntasks=1 --x11 --mem=4000M
```

For this to work, your SSH session to the login node must also support graphics: either through the -Y flag of ssh or by enabling X11 forwarding in PuTTY. If you are using macOS, you will have to install XQuartz to enable X11 forwarding.

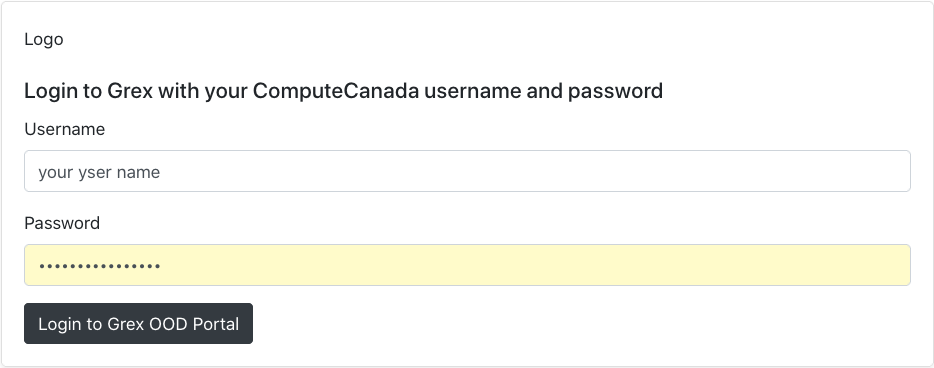
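A quick way to check the whole X11 chain is sketched below. It assumes you logged in with ssh -Y; the login address is a placeholder, and xclock is only an example X client that may not be installed. Because a partition is required for every job, the sketch adds --partition=skylake and a short --time= to the request; adjust both as needed.

```shell
#!/usr/bin/env bash
# Step 1 (on your workstation, shown for reference; host is a placeholder):
#   ssh -Y <username>@<grex-login-address>
# Step 2 (on the login node): X11 forwarding works only if DISPLAY is set.
if [ -n "${DISPLAY:-}" ]; then
    x11_ready=yes
    echo "DISPLAY=${DISPLAY}: X11 forwarding looks active"
    # Step 3: request a small interactive job with X11 forwarded to it,
    # then start a GUI program inside the session, e.g. 'xclock'.
    if command -v salloc >/dev/null 2>&1; then
        salloc --ntasks=1 --x11 --mem=4000M --partition=skylake --time=0-1:00:00
    fi
else
    x11_ready=no
    echo "DISPLAY is not set: reconnect with 'ssh -Y' (or enable X11 in PuTTY)" >&2
fi
```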

You may also try the OpenOnDemand portal on Grex.