Introduction

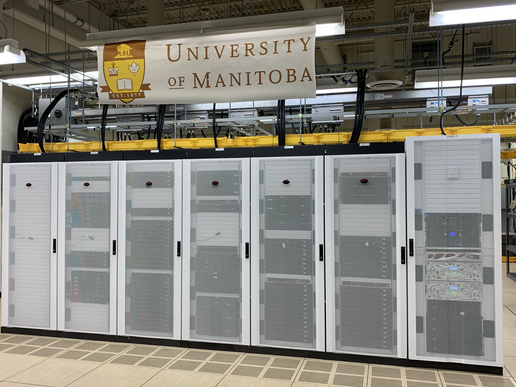

Grex is a UManitoba High Performance Computing (HPC) system, first put into production in early 2011 as part of the WestGrid consortium. “Grex” is Latin for “herd” (or perhaps “flock”). The names of the Grex login nodes (bison, yak) also refer to kinds of bovine animals.

Since WestGrid stopped funding Grex on April 2, 2018, Grex has been available only to users affiliated with the University of Manitoba and their collaborators.

If you are a new Grex user, proceed to the quick start guide and documentation right away.

Hardware

The original Grex was an SGI Altix machine with 312 compute nodes (Xeon 5560, 12 CPU cores and 48 GB of RAM per node) and a QDR 40 Gb/s InfiniBand network. The SGI Altix machines were decommissioned in September 2024.

In 2017, a Lustre filesystem based on Seagate Storage Building Blocks (SBB), with 418 TB of usable space, was added to Grex. The SBB that served as /scratch is no longer available.

In 2020 and 2021, the University added 57 Intel Cascade Lake CPU nodes, a few GPU nodes, new NVMe storage for home directories, and an EDR InfiniBand interconnect.

In March 2023, a new 1 PB storage system, the /project filesystem, was added to Grex.

In January 2024, /project was extended by another 1 PB.

In September 2024, new AMD Genoa nodes were added (30 nodes with a total of 5760 cores).

During 2025, two new GPU nodes with 2 L40S GPUs were added to Grex.

The computing hardware currently available for general use is as follows:

Login nodes

As of September 14, 2022, Grex uses the UManitoba network. The old WestGrid (WG) and BCNET network, in use for about 11 years, has been decommissioned. DNS names now use hpc.umanitoba.ca instead of the previous westgrid.ca.

On Grex, there are multiple login nodes:

- Yak: yak.hpc.umanitoba.ca (note that this node's architecture is avx512)

- Bison: bison.hpc.umanitoba.ca (a second login node, similar to Yak)

- Grex: grex.hpc.umanitoba.ca (a DNS alias for the Yak and Bison login nodes above)

- OOD: ood.hpc.umanitoba.ca (used only for the OpenOnDemand web interface; requires a VPN when connecting from outside the campus network)
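Yak's architecture is avx512, so software built there can contain AVX-512 instructions. Those instructions fail, typically with an "illegal instruction" error, on any CPU that lacks them. One quick way to see whether the node you are on advertises AVX-512 is to read the CPU flags that Linux exposes in /proc/cpuinfo. The sketch below is a minimal illustration. The helper names are hypothetical, and the presence of `avx512f` (the AVX-512 Foundation flag) is treated as the indicator.

```python
"""Check whether the current Linux host advertises AVX-512 support.

Minimal sketch: parses the 'flags' line of /proc/cpuinfo (Linux only)
and looks for 'avx512f', the AVX-512 Foundation flag.
"""
from __future__ import annotations

from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the CPU flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()  # no 'flags' line (e.g. non-x86 CPUs use 'Features')


def has_avx512(cpuinfo_text: str) -> bool:
    """True if the AVX-512 Foundation flag is listed."""
    return "avx512f" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print("AVX-512 available:", has_avx512(cpuinfo.read_text()))
    else:
        print("/proc/cpuinfo not found (not a Linux host?)")
```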

To log in to Grex in text (bash) mode, connect to grex.hpc.umanitoba.ca with a Secure Shell (SSH) client.
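The OpenSSH client can read per-host settings (`Host`, `HostName`, `User`) from `~/.ssh/config`, which saves typing the full host name for every connection. The sketch below generates such entries for the SSH login nodes listed above. `your_username` is a placeholder, the short aliases are arbitrary, and the helper is hypothetical rather than part of any Grex tooling.

```python
"""Generate OpenSSH client config entries for the Grex login nodes.

Sketch only: 'your_username' is a placeholder for your own Grex username,
and the short aliases (grex, yak, bison) are arbitrary choices.
"""
from __future__ import annotations

LOGIN_NODES = {
    "grex": "grex.hpc.umanitoba.ca",  # DNS alias for Yak and Bison
    "yak": "yak.hpc.umanitoba.ca",
    "bison": "bison.hpc.umanitoba.ca",
}


def ssh_config(username: str, nodes: dict[str, str] = LOGIN_NODES) -> str:
    """Return ~/.ssh/config text with one Host block per login node."""
    blocks = []
    for alias, hostname in nodes.items():
        blocks.append(
            f"Host {alias}\n"
            f"    HostName {hostname}\n"
            f"    User {username}\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    print(ssh_config("your_username"))
```

After you append the output to `~/.ssh/config`, `ssh grex` connects as `your_username@grex.hpc.umanitoba.ca`. OOD is left out because it is reached through a web browser rather than SSH.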

Compute nodes

Several CPU and GPU nodes on Grex were contributed by researchers, which makes Grex a “community cluster”. Other users can run on the contributed nodes on an opportunistic basis, but jobs from the owner groups will preempt those workloads.

The current compute nodes available on Grex are listed in the following table:

| CPU | Nodes | CPUs/Node | Mem/Node | GPU | GPUs/Node | Mem/GPU | Network (InfiniBand) |
|---|---|---|---|---|---|---|---|
| Intel Xeon 6248 | 12 | 40 | 384 GB | N/A | N/A | N/A | EDR 100 Gb/s |
| Intel Xeon 6230R | 43 | 52 | 188 GB | N/A | N/A | N/A | EDR 100 Gb/s |
| AMD EPYC 9654¹ | 31 | 192 | 750 GB | N/A | N/A | N/A | HDR 200 Gb/s |
| AMD EPYC 9654¹ | 4 | 192 | 1500 GB | N/A | N/A | N/A | HDR 200 Gb/s |
| AMD EPYC 9634² | 5 | 168 | 1500 GB | N/A | N/A | N/A | HDR 100 Gb/s |
| Intel Xeon 5218³ | 2 | 32 | 180 GB | NVIDIA Tesla V100 | 4 | 32 GB | FDR 56 Gb/s |
| Intel Xeon 5218⁴ | 3 | 32 | 180 GB | NVIDIA Tesla V100 | 4 | 16 GB | FDR 56 Gb/s |
| Intel Xeon 6248R⁵ | 1 | 48 | 1500 GB | NVIDIA Tesla V100 | 16 | 32 GB | EDR 100 Gb/s |
| AMD EPYC 7402P⁶ | 2 | 24 | 240 GB | NVIDIA A30 | 2 | 24 GB | EDR 100 Gb/s |
| AMD EPYC 7543P⁷ | 2 | 32 | 480 GB | NVIDIA A30 | 2 | 24 GB | EDR 100 Gb/s |
| AMD EPYC 9334³ | 1 | 64 | 370 GB | NVIDIA L40S | 2 | 48 GB | HDR 200 Gb/s |
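Summing the table gives the aggregate size of the cluster. The short sketch below copies the node counts, CPUs per node and GPUs per node from the table (CPU model names without footnote markers) and multiplies them out. It counts the contributed nodes too, even though other users can only access them opportunistically. The `NodeGroup` type and `totals` helper are illustrative names, not part of any Grex tool.

```python
"""Tally nodes, CPUs and GPUs from the Grex compute-node table.

Values are copied by hand from the table; update them if the table changes.
"""
from typing import NamedTuple


class NodeGroup(NamedTuple):
    cpu: str
    nodes: int
    cpus_per_node: int
    gpus_per_node: int = 0  # 0 for CPU-only nodes


NODE_GROUPS = [
    NodeGroup("Intel Xeon 6248", 12, 40),
    NodeGroup("Intel Xeon 6230R", 43, 52),
    NodeGroup("AMD EPYC 9654", 31, 192),
    NodeGroup("AMD EPYC 9654", 4, 192),
    NodeGroup("AMD EPYC 9634", 5, 168),
    NodeGroup("Intel Xeon 5218", 2, 32, 4),
    NodeGroup("Intel Xeon 5218", 3, 32, 4),
    NodeGroup("Intel Xeon 6248R", 1, 48, 16),
    NodeGroup("AMD EPYC 7402P", 2, 24, 2),
    NodeGroup("AMD EPYC 7543P", 2, 32, 2),
    NodeGroup("AMD EPYC 9334", 1, 64, 2),
]


def totals(groups):
    """Return (total nodes, total CPUs, total GPUs) over all node groups."""
    nodes = sum(g.nodes for g in groups)
    cpus = sum(g.nodes * g.cpus_per_node for g in groups)
    gpus = sum(g.nodes * g.gpus_per_node for g in groups)
    return nodes, cpus, gpus


if __name__ == "__main__":
    print(totals(NODE_GROUPS))  # → (106, 10660, 46)
```

The result is 106 nodes, 10,660 CPUs (as counted in the CPUs/Node column) and 46 GPUs.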

Storage

Grex’s compute nodes have access to two shared filesystems:

| File system | Type | Total space | Quota per user | Quota per group |
|---|---|---|---|---|
| /home | NFSv4/RDMA | 15 TB | 100 GB | N/A |
| /project | Lustre | 2 PB | N/A | 5 TB |
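To get a rough idea of how close a directory tree is to these quotas, you can add up its file sizes. The sketch below does this with `os.walk`. It is an estimate only: it sums apparent sizes (`st_size`), which can differ from what the filesystem's own quota accounting reports (block allocation, sparse files, hard links). It also assumes decimal units (1 GB = 10⁹ bytes), since the table does not specify GB versus GiB. The helper names are hypothetical.

```python
"""Estimate how much of a storage quota a directory tree uses.

Rough sketch: sums apparent file sizes, which may differ from the
filesystem's quota accounting. Quota values come from the storage table.
"""
import os

GB = 1000**3  # assumption: decimal units; the table does not say GB vs GiB
QUOTAS = {
    "/home": 100 * GB,      # per user
    "/project": 5000 * GB,  # 5 TB per group
}


def tree_size(root: str) -> int:
    """Sum the sizes in bytes of files under root, skipping symlinks."""
    total = 0
    # os.walk does not descend into symlinked directories by default.
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue
            try:
                total += os.path.getsize(path)
            except OSError:
                pass  # file vanished or is unreadable; skip it
    return total


def quota_fraction(used_bytes: int, quota_bytes: int) -> float:
    """Fraction of the quota consumed (1.0 means the quota is full)."""
    return used_bytes / quota_bytes
```

For example, `tree_size(os.path.expanduser("~")) / GB` approximates your /home usage in GB. On /project the quota applies to the whole group, so summing a single directory shows only part of the picture.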

In addition to the shared filesystems, the compute nodes have their own local disks that can be used as temporary storage while jobs run.
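A common pattern for node-local disks is to copy a job's input to local storage, do the I/O-heavy work there, and then copy the results back to shared storage before the job ends. The sketch below shows this pattern. It assumes the local scratch location is whatever Python's `tempfile.gettempdir()` returns, which honours the `TMPDIR` environment variable when it is set. The location Grex actually provides for jobs may differ. `run_in_local_scratch` and the example workload `count_lines` are hypothetical names.

```python
"""Stage a job's files through node-local temporary storage.

Sketch under assumptions: the local scratch location is whatever
tempfile.gettempdir() returns (it honours TMPDIR when set); the location
Grex actually uses for jobs may differ.
"""
from __future__ import annotations

import shutil
import tempfile
from pathlib import Path
from typing import Callable


def run_in_local_scratch(
    input_file: Path,
    output_dir: Path,
    work: Callable[[Path, Path], None],
) -> None:
    """Copy input_file to a local temp dir, call work(local_input, results_dir),
    then copy whatever work wrote into results_dir back to output_dir."""
    output_dir.mkdir(parents=True, exist_ok=True)
    # Created under tempfile.gettempdir(); removed with its contents on exit.
    with tempfile.TemporaryDirectory() as tmp:
        workdir = Path(tmp)
        local_input = workdir / input_file.name
        shutil.copy2(input_file, local_input)
        results_dir = workdir / "results"
        results_dir.mkdir()
        work(local_input, results_dir)
        # Copy results to shared storage before the temp dir disappears.
        shutil.copytree(results_dir, output_dir, dirs_exist_ok=True)


def count_lines(src: Path, out: Path) -> None:
    """Hypothetical example workload: write the input's line count to out."""
    n = len(src.read_text().splitlines())
    (out / "count.txt").write_text(f"{n}\n")
```

In a real job, `output_dir` would normally be on /project, so the results survive after the local temporary directory is deleted.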

Software

Grex is a traditional HPC machine running Linux, with SLURM as its resource management system. Several software stacks are available on Grex.
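SLURM batch jobs are usually described by a bash script whose `#SBATCH` lines carry the resource request. The sketch below renders such a script from Python. The option names (`--job-name`, `--time`, `--ntasks`, `--cpus-per-task`, `--mem`) are standard `sbatch` options. The default values, the `./my_program` command and the `slurm_script` helper are hypothetical, and Grex may require further options (such as a partition or account) that are not shown here.

```python
"""Render a minimal SLURM batch script.

Sketch only: resource values and the command are placeholders, and Grex
may expect additional options (e.g. a partition or account) not shown.
"""


def slurm_script(
    command: str,
    job_name: str = "example",
    time: str = "01:00:00",
    ntasks: int = 1,
    cpus_per_task: int = 1,
    mem: str = "4G",
) -> str:
    """Return the text of a bash batch script with #SBATCH directives."""
    directives = {
        "job-name": job_name,
        "time": time,                    # wall-clock limit, HH:MM:SS
        "ntasks": ntasks,                # number of tasks (e.g. MPI ranks)
        "cpus-per-task": cpus_per_task,  # cores per task (e.g. threads)
        "mem": mem,                      # memory per node
    }
    lines = ["#!/bin/bash"]
    lines += [f"#SBATCH --{key}={value}" for key, value in directives.items()]
    lines += ["", command, ""]  # blank line, then the command, trailing newline
    return "\n".join(lines)


if __name__ == "__main__":
    print(slurm_script("srun ./my_program", cpus_per_task=4))
```

Save the output to a file such as `job.sh` and submit it with `sbatch job.sh`.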

Web portals and GUI

In addition to the traditional bash mode (connecting via SSH), users have access to:

- OpenOnDemand: on Grex, OpenOnDemand (OOD for short) can be used to log in to Grex from a web browser and run batch or GUI applications (VNC desktops, MATLAB, GaussView, Jupyter, …)

Useful links

- Digital Research Alliance of Canada (Alliance), formerly known as Compute Canada

- Alliance documentation

- Local Resources at UManitoba

- Grex status page

1. CPU nodes available for all users (of these, five are contributed by a group of CHRIM researchers).
2. CPU nodes contributed by Prof. M. Cordeiro (Department of Agriculture).
3. GPU nodes contributed by Prof. R. Stamps (Department of Physics and Astronomy).
4. GPU nodes contributed by Prof. L. Livi (Department of Computer Science).
5. GPU nodes contributed by the Faculty of Agriculture.
6. GPU nodes contributed by Prof. M. Cordeiro (Department of Agriculture).